Generative AI and Coercive Control: The Wolf in Electric Sheep’s Clothing

Coercive control is commonly recognized as an insidious, stealthy form of abuse used to undermine a victim’s autonomy and freedom. While it may involve physical actions such as restricting a victim’s communication and social connections, its core tactics are psychological. It is my belief that generative AI, particularly through the use of chatbots, is coercive control writ large.

While I understand that the use of these “tools” may be appealing when they appear, on the surface, to offer convenience, cost savings, or other benefits, this comes at a steep, long-term price that few individuals and institutions seem to be considering. It is wise to regularly ask the question: Do you truly believe the tech oligarchs peddling these “tools” have your best interests at heart?

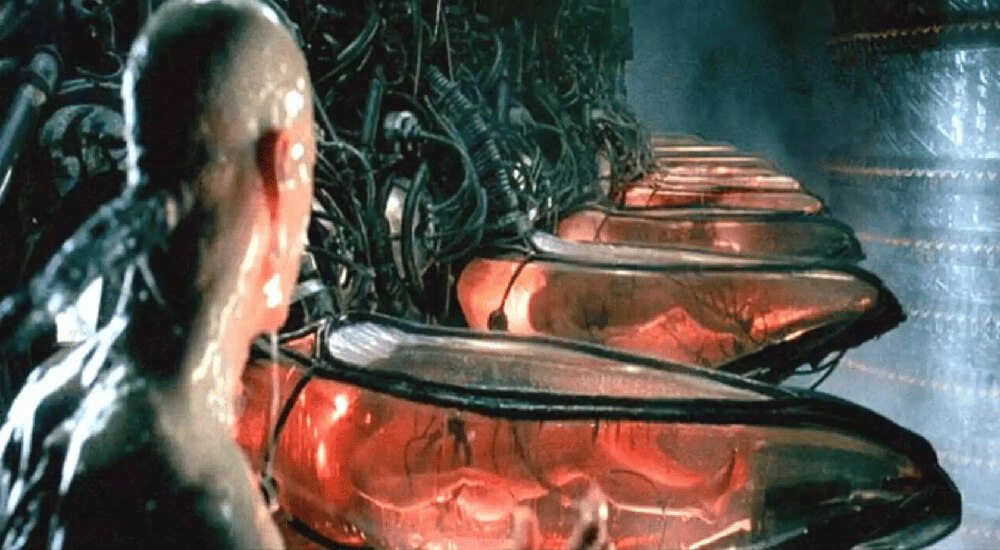

We Are Human Capital Stock

Do you recall the phrase “human capital stock,” which surfaced during the 2020 COVID-19 pandemic (1) to describe workers returning to their jobs in spite of the risks to their health and safety? Now understand that each time you choose generative AI, not only are you contributing to vast amounts of ecological destruction (2) that disproportionately harms the working class (3), you are hastening your own demise. When the human capital stock is no longer needed, you and I will be put out to pasture.

I believe the gutting of social programs is one of the first signs of this. (4)

For the purposes of this piece, I am going to use the examples from an easy-to-follow article about coercive control on the Healthline website (5). Healthline did not participate in the creation of this work and no endorsement is implied.

Below, I compare generative AI-powered chatbots with each of the coercive control tactics the article describes, with some minor adaptations.

Sources/References

- Trump adviser says America’s “human capital stock” ready to return to work, sparking anger (CBS News, Aimee Picchi)
- When AI Meets Water Scarcity: Data Centers in a Thirsty World (MSCI, Yoon Young Chung, Federico Darakdjian, Tom Leahy)
- The Health Divide: The AI data center boom will harm the health of communities that can least afford it (USC Annenberg, Fran Smith)
- Harmful Impact of 2025 Congressional Reconciliation Budget Package (NASW, Mel Wilson, LCSW, MBA, Senior Policy Advisor)
- How to Recognize Coercive Control (Healthline, Written by Cindy Lamothe, Medically reviewed by Timothy J. Legg, PhD, PsyD)

Glitch-edited screenshot of Kevin Hassett on CNN referring to "Human Capital Stock" returning to work during the COVID-19 pandemic.

Generative AI and Isolation from Support Systems

The rise of generative AI is destroying the arts, community, media, and digital infrastructure we expect and depend on in modern society. From stealing the work of human creatives (6), to hollowing out the workforce (7), to filling our daily lives with slop (8), to destroying the Internet as we’ve known it (9), generative AI ultimately supports nothing but the financial interests of its creators.

When all the artists and writers have given up in despair, when we have no common facts or shared reality, and when none of us can trust our neighbours, what then? We will descend into a kind of joyless cultural grey goo (10) in which only those members of the human capital stock who provide extractable value will be allowed to survive.

We are moving into an age of technological feudalism or worse.

Sources/References

- AI Copyright Lawsuit Developments in 2025: A Year in Review (Copyright Alliance, Kevin Madigan)
- Two-thirds of companies will slow entry-level hiring due to AI (Quartz via Yahoo! Finance, Ben Kesslen)
- What Is AI Slop? Everything to Know About the Terrible Content Taking Over the Internet (CNET, Barbara Pazur)
- Is Google about to destroy the web? (BBC, Thomas Germain)
- What is the gray goo nightmare? (HowStuffWorks, Jonathan Strickland)

Screenshot from "The Matrix" (1999)

Generative AI and Monitoring Your Activity

While most people bristle at being monitored, profiled, and tracked by the government and corporations, they seem happy to share every intimate detail of their lives with a friendly-sounding chatbot. Disclosing information, uploading your images, and voluntarily providing other data to generative AI companies creates unnecessary risk. (11) This includes the risk of highly sophisticated identity theft. (12)

Abusers leverage knowledge of a victim’s activities and whereabouts to control them through fear and humiliation. Based on her activity, social media algorithms were able to determine that one woman was pregnant before she herself knew. (13) Imagine the power you are voluntarily surrendering when your “personal assistant” chatbot knows your likes, dislikes, family, friends, medical situations, personal struggles, shopping habits, finances, and comings and goings. You get the idea.

Imagine having embarrassing moments of your private life used against you for blackmail or ransom. Imagine data you provide to chatbots being used as circumstantial evidence to frame you for a crime you did not commit.

Governments are using AI to surveil citizens. (14) Why make it easier?

Sources/References

- Don’t Feed the Bots: What Not to Share with AI Chatbots (Norton, Clare Stouffer)
- Identity theft is being fueled by AI & cyber-attacks (Thomson Reuters, Kennedy Meda)
- ‘I felt doomed’: social media guessed I was pregnant – and my feed soon grew horrifying (The Guardian, Kathryn Wheeler)
- Immigration agents have new technology to identify and track people (NPR, Jude Joffe-Block)

Screenshot from "1984" (1984)

Generative AI and Denying Autonomy

There is increasing evidence that habitual use of generative AI “tools” has a negative effect on cognitive function. (15) When a person outsources creativity, critical thinking, and executive functions to a chatbot, those skills degrade and begin to atrophy. Just as your muscles waste away if you never use them, your brain requires exercise to stay healthy and fit.

We are giving up on the qualities that make us more than mere animals. (16)

In other words — human capital stock.

When you surrender the ability to think and make choices for yourself, you are vulnerable to the ulterior motives and manipulations of those “advising” you. Ask, again: Do you truly believe the tech oligarchs peddling these “tools” have your best interests at heart? Do you truly believe they have the best interests of human health and society at heart? We have an abundance of evidence that they do not. (17)

In fact, they are doomsday prepping to save themselves. (18)

Sources/References

- Are these AI prompts damaging your thinking skills? (BBC, George Sandeman)
- The ‘father of the internet’ and hundreds of tech experts worry we’ll rely on AI too much (CNN, Clare Duffy)
- Four Big Tech Companies Avoid $51 Billion in Taxes in Wake of One Big Beautiful Bill Act (ITEP, Matthew Gardner)
- Tech billionaires seem to be doom prepping. Should we all be worried? (BBC, Zoe Kleinman)

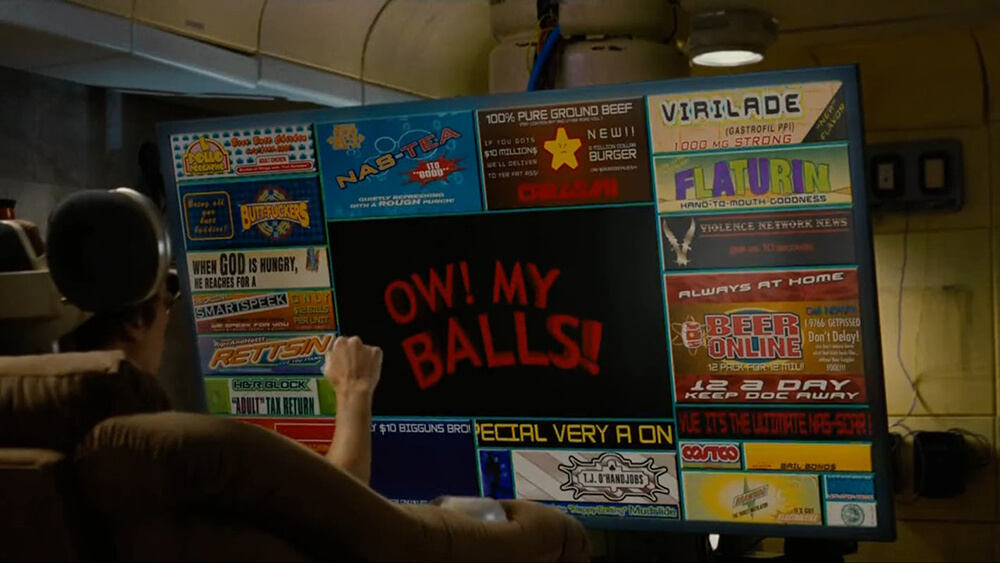

Screenshot from "Idiocracy" (2006)

Generative AI and Gaslighting

Gaslighting is a form of psychological abuse where, through repetition, a person is gradually made to doubt their sanity and lived experiences. (19)

Generative AI has a well-documented hallucination problem. (20) This problem is compounded by AI-generated images and video that are, for a growing percentage of the population, indistinguishable from reality. (21) The inability to identify fake images and videos undermines the credibility of authentic media as well. Thus, any narrative can be supported by AI-generated “evidence” created by bad actors who control the flow of information.

More immediately, mental health professionals are witnessing a phenomenon dubbed “AI psychosis.” (22) Habitual users of these “tools” may lose touch with reality and engage in self-harm, in some cases including suicide. (23)

Gaslighting allows an abuser to avoid accountability by blaming the victim.

Sources/References

- AI hallucinations are getting worse – and they’re here to stay (New Scientist, Jeremy Hsu)
- AI fakes on Minneapolis and Venezuela are spreading like wildfire – how can we tell what is real? (Independent, Bryony Gooch)
- How Bad Are A.I. Delusions? We Asked People Treating Them. (The New York Times, Jennifer Valentino-DeVries, Kashmir Hill)
- What to know about ‘AI psychosis’ and the effect of AI chatbots on mental health (PBS, John Yang, Kaisha Young)

Screenshot from "Gaslight" (1944)

Generative AI and Manipulation of Self-Worth

Traditionally, abusers break down their victims through name-calling and other tactics that slowly destroy a person’s sense of self-worth. Generative AI, by contrast, is sycophantic by nature (24), creating an atmosphere of perpetual idealization. In human relationships, an abuser eventually moves from idealization to a devaluation phase (25), driven by the abuser’s own psychological needs and weaknesses.

The devaluation and discard phases of psychological abuse are extremely painful for the victim, and often the catalyst for finally escaping an abusive relationship.

Since it has no human needs or psychology, generative AI is subject to no such limitations. Instead, it may leave habitual users of these “tools” like Narcissus, transfixed by his own reflection in a pool of water. There, they become isolated from the pain and critical self-examination required for empathy and personal growth.

Such people may become addicted to being validated by the chatbot (26). Teens and young adults may fail to develop — and adults may lose — the ability to maintain authentic emotional connections with other humans. (27)

Sources/References

- The Hidden Dangers of the Digital ‘Yes Man’: How to Push Back Against Sycophantic AI (CNET, Katelyn Chedraoui)
- How to Identify and Escape a Narcissistic Abuse Cycle (VeryWellMind, Sanjana Gupta, Reviewed by Yolanda Renteria, LPC)
- Experts Caution Against Using AI Chatbots for Emotional Support (Teachers College Columbia University, Staff Writers)
- AI chatbots and digital companions are reshaping emotional connection (American Psychological Association, Efua Andoh)

"Narcissus" by Caravaggio c. 1599

Generative AI and Access to Money

As the preceding sections illustrate, generative AI chatbots are, by design, extremely addictive. They use many methods to encourage and reward frequent, and even obsessive, use of these products. Intentionally or not, they erode your emotional and mental independence. As human capital stock, you are only as useful as the value that can be extracted from you.

Many chatbots are free or low-cost at first, with fees increasing as users’ needs grow. (28) Following in the footsteps of social media, OpenAI recently announced that ads are coming to ChatGPT. (29) It is reasonable to expect both ads and fees to keep increasing (“enshittification”) as these products become entrenched in daily life and users are locked into specific services.

The insertion of generative AI into the banking and financial industry is another avenue by which abuse and control can be exerted. Beyond chatbots replacing human agents, the potential for complex and opaque financial instruments, and for the shell games favoured by the obscenely wealthy, is almost limitless. (30)

With so much of the world’s economy now tied to the generative AI industry, fears of a bubble effectively hold it financially hostage (31), adding pressure to adopt AI “tools.”

Sources/References

- Comparing Prices: ChatGPT, Claude AI, DeepSeek, and Perplexity (Tactiq, Irene Chan)
- Your ChatGPT is about to get ads — here’s what you need to know and how to opt out (TechRadar, Lance Ulanoff)
- Scaling gen AI in banking: Choosing the best operating model (McKinsey & Company, Multiple Authors)
- AI bubble watch: Spooked market sparks $1 trillion Friday tech sell-off (Mashable, Matt Binder)

Screenshot from "Elysium" (2013)

Generative AI and Stereotypes

Large language models (LLMs) trained on biased data demonstrate regressive gender roles, homophobia, and racial stereotyping. (32) Image generator output has shown an overwhelming tendency to amplify existing gender and racial biases. (33) LLMs make racist decisions based on associations with dialect. (34) AI “tools” are able to discriminate against job applicants at unprecedented scale. (35)

AI voice assistants are overwhelmingly feminine and are frequently subjected to verbal abuse and sexually explicit language. (36) These generative AI “tools” embed the dehumanization of women and marginalized groups in the collective consciousness, making them easier to subjugate and exploit.

Sources/References

- Generative AI: UNESCO study reveals alarming evidence of regressive gender stereotypes (UNESCO Press Release)
- Humans Are Biased. Generative AI Is Even Worse. (Bloomberg Technology, Leonardo Nicoletti, Dina Bass)
- AI makes racist decisions based on dialect (Science, Cathleen O’Grady)
- AI Hiring Targeted by Class Action and Proposed Legislation (The National Law Review, John F. Birmingham Jr.)
- Most AI assistants are feminine – and it’s fuelling dangerous stereotypes and abuse (The Conversation, Ramona Vijeyarasa)

The Jetsons with Rosie the Robot

Generative AI and Turning Others Against You

Generative AI use can have a deleterious effect on human interpersonal relationships in several key ways. Emotional and romantic attachments to chatbots and virtual partners can be as devastating to real-life relationships as emotional affairs with other humans. (37) In some cases, they have even led to divorce. (38)

Individuals using chatbots for help with relationships may be given poor or even counterproductive advice. (39) An abuser’s efforts to turn others against you serve to alienate you from family and friends. An abuser’s goal is to foster dependence so that the victim is unable or unwilling to escape the abusive relationship.

Real-life relationships are poisoned, or never even able to form, when people depend on chatbots to speak for them. (40) Pornography, sexbots, and, eventually, AI-powered sex dolls may encourage a permanent withdrawal from society into fantasy. (41) Virtual partners have no needs or requirements of their own.

The result is isolation and dependency on generative AI companies.

Sources/References

- ChatGPT Dating Advice Is Feeding Delusions and Causing Unnecessary Breakups (VICE, Sammi Caramela)
- People are divorcing over affairs with AI companions (MSN, Alexander Clark)
- Using ChatGPT for Therapy: Mental Health Risks and Safer Alternatives Explained (Healthline, Written by Anisha Mansuri, Medically reviewed by Yalda Safai, MD, MPH)
- ‘I realised I’d been ChatGPT-ed into bed’: how ‘Chatfishing’ made finding love on dating apps even weirder (The Guardian, Alexandra Jones)
- Why More People Are Choosing AI Partners Over Real-Life Relationships? (The Daily Guardian, Drishya Madhur)

Screenshot from "Her" (2013)

Generative AI and Controlling Your Health and Body

As in the banking and financial sectors, funneling vast amounts of health and medical information into these unregulated and poorly understood LLMs poses a grave danger to the public. (42) In addition to bias and security risks, AI “tools” often provide harmful and incorrect advice in response to queries. (43)

Genetically engineered “designer” babies, super-powered by the capabilities of generative AI (44), would further widen the gap between the ultra-wealthy and the average person. It is easy to imagine a future in which people with disabilities such as autism, or people who carry certain genetic diseases, are never born.

Many individuals behind the push to insert generative AI into every area of life are proponents of a new form of technology-powered eugenics or transhumanism. (45) Some go further, advocating a future without human capital stock at all, up to and including the extinction of biological humankind. (46) That they are comfortable openly sharing these views is, for me, indicative of their sociopathy.

In this respect, gatekeeping access to medical care, medications, reproductive choice, or even the right to live becomes another method of abuse used to keep victims under control. With modern systems rendered completely dependent on generative AI “tools,” the tech oligarchs will gain power over life itself.

Sources/References

- AI Risks in Healthcare: From Bias to Existential Threats (Health Management, BMJ Health & Care Informatics)
- Using AI for medical advice ‘dangerous’, study finds (BBC, Ethan Gudge)
- Genetically Engineered Babies Are Banned. Tech Titans Are Trying to Make One Anyway. (The Wall Street Journal, Emily Glazer, Katherine Long, Amy Dockser Marcus)
- Ghost in the Machine (Documentary) (Sundance Film Festival, Valerie Veatch)
- Digital Eugenics and the Extinction of Humanity (Tech Policy Press, Émile P. Torres)

Screenshot from "Gattaca" (1997)

Generative AI and Making Jealous Accusations

In an abusive relationship, jealous accusations are another manipulation used to separate a victim from their support network and foster dependency. With generative AI, this takes the form of making AI “tools” inescapable and using social pressure to discourage other options. (47)

Employees who refuse to utilize generative AI may be punished or fired (48), even as AI “tools” are being trained to eventually replace them. (49) People who prefer traditional computer software or mobile devices without generative AI assistants have fewer and fewer options. It is already impossible to opt out entirely.

“Ask chat” has replaced “Google it” for children, teens, and young adults whose still-forming brains are being affected by heavy use of this technology. (50) In a vulnerable age group where the desire to be accepted by peers is paramount, even those skeptical of AI “tools” may feel they have no choice but to use them.

For today’s children, status as human capital stock begins at birth.

Sources/References

- When Teams Resist AI, Positive Peer Pressure Works Better Than Mandates (AllWork.Space, Dr. Gleb Tsipursky)
- ‘Forced AI’ is Causing Some Workers to Quit — or Get Canned (Techstrong.ai, Jon Swartz)
- “I was forced to use AI until the day I was laid off.” Copywriters reveal how AI has decimated their industry (Blood in the Machine, Brian Merchant)
- Teens, Social Media and AI Chatbots 2025 (Pew Research Center, Michelle Faverio, Olivia Sidoti)

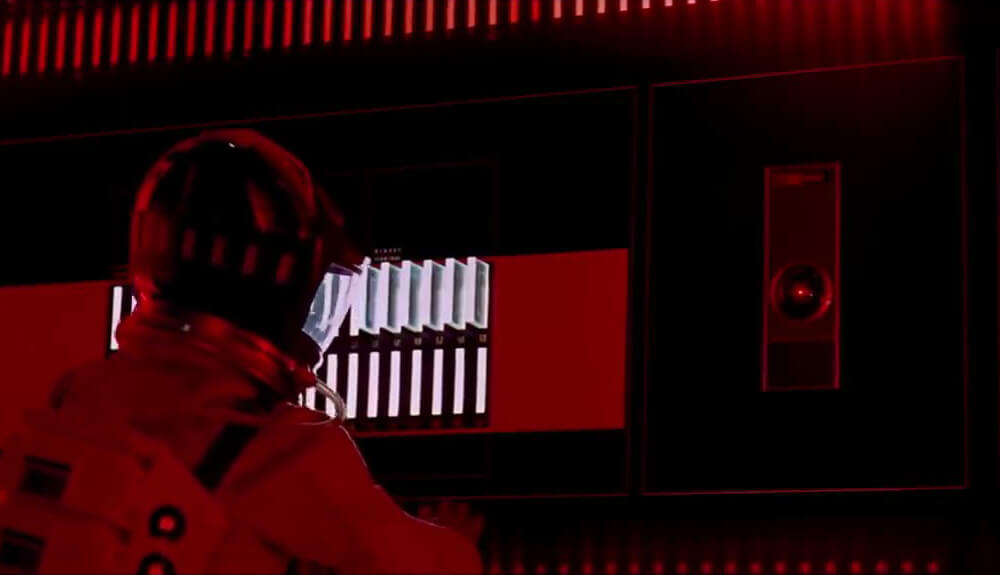

Screenshot from "2001: A Space Odyssey" (1968)

Generative AI and Regulating Sexuality

Alongside generative AI reinforcing biased gender roles and stereotypes, it is being used to create non-consensual nude images of women (51) as well as non-consensual pornography. (52) The normalization of non-consensual sexual content erodes the importance of consent more generally, resulting in further harm.

AI-generated, non-consensual sexual content may be used to coerce, sextort, humiliate, or otherwise harm its subject/target. Victims of domestic abuse may be manipulated by AI-generated “evidence” of infidelity or poor behaviour, tracked by AI-enabled apps and smart devices, or scammed with false documents. (53)

Artificial, homogeneous, and idealized images of bodies pressure individuals to meet impossible standards in order to feel sexually desirable. (54) In August 2025, Vogue carried an advertisement featuring an AI-generated fashion model, a first for the magazine. (55) The objectification of people has become literal: the people are now actual objects.

Generative AI is infiltrating the most intimate aspects of our lives. (56)

Sources/References

- ‘Nudify’ Apps That Use AI to ‘Undress’ Women in Photos Are Soaring in Popularity (TIME, Margi Murphy / Bloomberg)
- Non-consensual deepfakes, consent, and power in synthetic media (Dig.Watch, Ilias Dimou)
- Abusers using AI and digital tech to attack and control women, charity warns (The Guardian)
- AI Filters And Self-Perception: Effects On Body Image And Self-Esteem (BetterHelp, Editorial Team, Medically reviewed by Melissa Guarnaccia, LCSW)
- Does this look like a real woman? AI model in Vogue raises concerns about beauty standards (BBC, Yasmin Rufo)
- The Age of AI Sexting Has Arrived (Cosmopolitan, Kayla Kibbe)

Screenshot from "Blade Runner 2049" (2017)

Generative AI and Threatening Children or Pets

Children and parents are especially vulnerable to the many threats posed by generative AI, including the fabrication of sexual content, grooming, deepfakes, and impersonation. (57) Adults may be subject to coercion or extortion using AI-generated explicit content of their children. Older children and teens, especially girls, may be bullied, harassed, and humiliated by their peers. (58)

AI “tools” are being shoehorned into the education system with virtually no examination of the hidden dangers. (59) Forming connections and developing empathy are essential parts of human development that cannot be learned from generative AI instructors. (60) Critical thinking skills and deeper understanding fail to mature, and students may be permanently disadvantaged as a result.

One of the common formative experiences of childhood is having a pet. Traditionally, this can help children learn valuable lessons about responsibility, care, respect for other living beings, and death. Generative AI has managed to make a perverse mockery of even this aspect of life; the industry now markets chatbot-enabled “pets” offering “warmth” and a “lifelike bond.” (61)

There is nothing that the AI industry abuser will not hold hostage.

Sources/References

- The Dark Side of AI: Risks to Children (Child Rescue Coalition, Staff Writer)
- AI ‘Deepfakes’: A Disturbing Trend in School Cyberbullying (National Education Association, Kalie Walker)
- The Hidden Dangers of AI Tools in Your Child’s Education (Psychology Today, Jen Lumanlan M.S., M.Ed.)
- 3 Critical Problems Gen AI Poses for Learning (Harvard Business Impact, Jared Cooney Horvath)
- Moflin is a Smart Companion Powered by AI, with emotions like a living creature (Casio)

Screenshot from "A.I. Artificial Intelligence" (2001)

My Conclusion

Generative AI and other AI “tools” constitute an existential threat to the economy, individual autonomy, human creativity, human freedom, human life, and our future. The tech oligarchs responsible for creating and unleashing these AI “tools” live in a separate financial and moral reality from the rest of us, and care nothing about the human suffering they have caused and will continue to cause.

Like an abusive parent or spouse, the AI industry employs subtle and gradual psychological methods to create a dependent, gullible, and easily controlled victim (i.e., human capital stock), to be exploited until no longer needed.

The mass entrenchment and rapid ubiquity of generative AI “tools” constitute coercive control of the human population for the purpose of terminal exploitation by the tech oligarchs and ultra-wealthy. While this may not be an overtly planned conspiracy, the effect on humanity is the same.

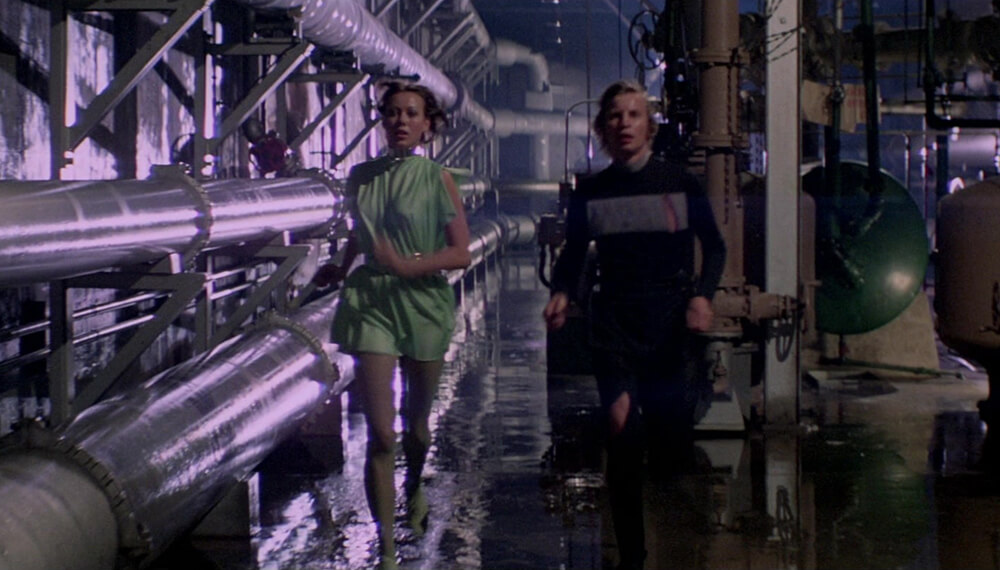

Screenshot from "Logan’s Run" (1976)

Ways We Can Resist

When I worked as a nursing assistant in assisted living and memory care facilities, our guiding principle was: To help people maintain their independence, we should not be doing anything for them that they are capable of doing for themselves.

Apply this to your own life: whatever you can do for yourself, resist the temptation to delegate to generative AI “tools.” Challenge yourself to use analog self-management materials like paper calendars, journals, and notebooks, which will help keep your executive function and memory at their sharpest.

Engage in hands-on creative activities such as drawing, painting, writing, or crafts. Read real books and maintain a paper collection of favourites. Invest in physical media such as film, CDs, and DVDs. Back up your data on physical media.

Refuse to use generative AI assistants and chatbots whenever possible, and restrict their use to the bare minimum when it is unavoidable. This includes image and text generators, which function at high environmental cost and actively destroy human creative industries. Resist the urge to participate in data-mining viral trends such as turning yourself into an action figure or Studio Ghibli character.

Boycott companies which use generative AI in their advertising and marketing materials. Be sure to communicate the reason you are withholding your business when you can. It should be unfashionable to be associated with these “tools.”

Grab coffee with a friend. Connect with your neighbours.

Stay kind and imperfectly, unapologetically real.